Research Domains

Numerical Methods and Software for Scientific Machine Learning

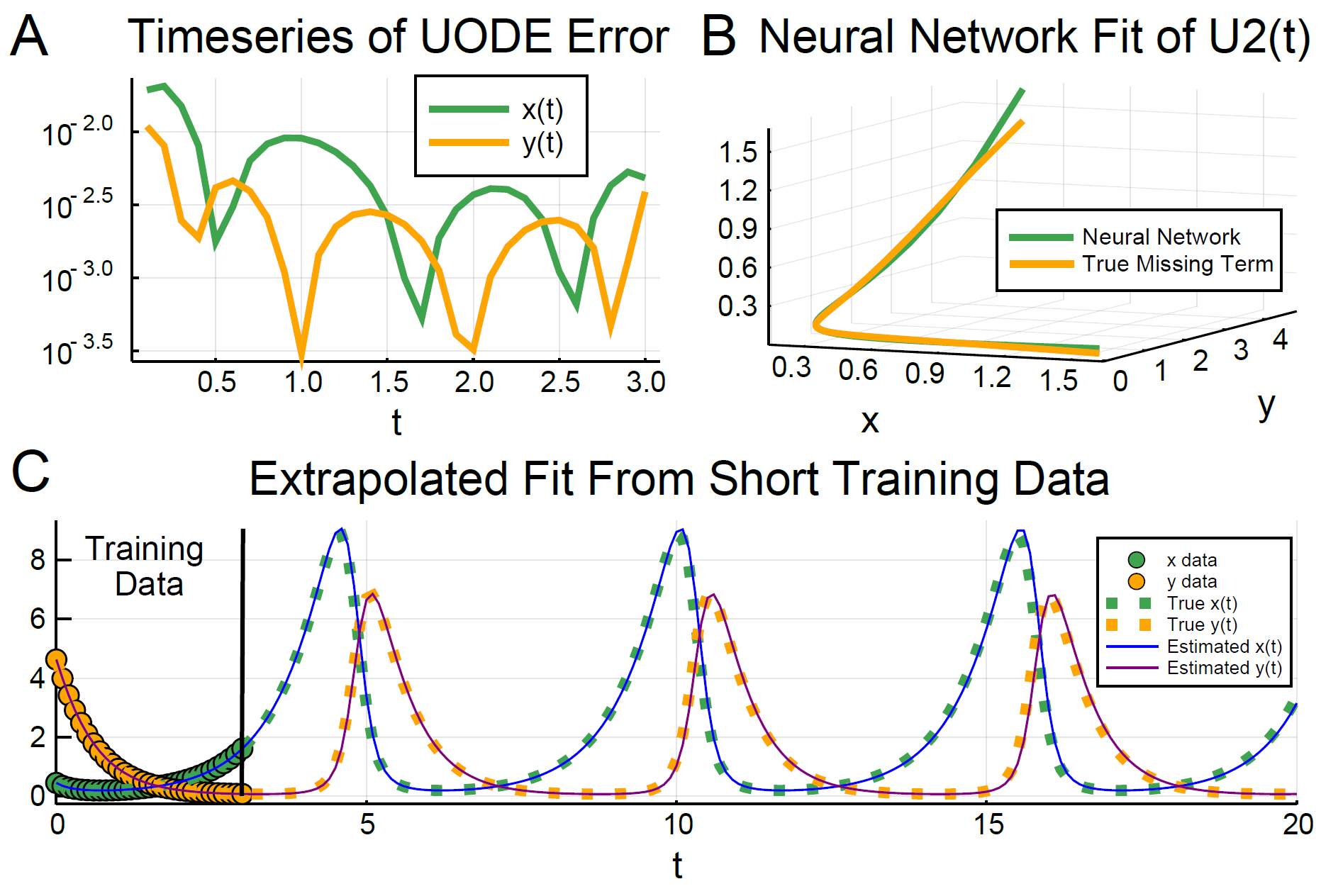

Modeling practice seems to be partitioned into scientific models defined by mechanistic differential equations and machine learning models defined by parameterizations of neural networks. While the ability for interpretable mechanistic models to extrapolate from little information is seemingly at odds with the big data "model-free" approach of neural networks, the next step in scientific progress is to utilize these methodologies together in order to emphasize their strengths while mitigating weaknesses. Chris' research is defining scientific AI: the integration of domain models (usually differential equations) with artificial intelligence techniques such as machine learning and probabilistic programming. Universal differential equations have been shown to be an approach that can merge these two worlds together, allowing for scientifically-informed artificial intelligence and machine learning. These techniques have also been shown to overcome the issues of traditional machine learning, with the ability to train from small data, extrapolate outside of the original dataset, and be interpreted back into physical mechanisms.
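As a rough illustration of the idea, a universal differential equation can be read as a known mechanistic right-hand side plus a learnable term. The sketch below is plain Python and is not the DiffEqFlux.jl API. The names `mechanistic_rhs`, `tiny_network`, `ude_rhs`, and `rk4_solve`, the choice of a linear decay model, and the network size are all assumptions made for illustration. Training is omitted; only the forward simulation is shown.

```python
import math

def mechanistic_rhs(u, decay_rate):
    # Known physics (assumed for illustration): linear decay du/dt = -decay_rate * u.
    return -decay_rate * u

def tiny_network(u, params):
    # One hidden tanh layer mapping the scalar state to a scalar correction.
    hidden_weights, hidden_biases, output_weights, output_bias = params
    hidden = [math.tanh(w * u + b) for w, b in zip(hidden_weights, hidden_biases)]
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias

def ude_rhs(u, decay_rate, params):
    # Universal differential equation: mechanistic term plus learned term.
    return mechanistic_rhs(u, decay_rate) + tiny_network(u, params)

def rk4_solve(rhs, u0, t_end, n_steps):
    # Classical fixed-step fourth-order Runge-Kutta for an autonomous scalar ODE.
    dt = t_end / n_steps
    u = u0
    trajectory = [u]
    for _ in range(n_steps):
        k1 = rhs(u)
        k2 = rhs(u + 0.5 * dt * k1)
        k3 = rhs(u + 0.5 * dt * k2)
        k4 = rhs(u + dt * k3)
        u = u + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        trajectory.append(u)
    return trajectory

# With every network parameter at zero the learned term vanishes, so the
# simulation reduces to the purely mechanistic model du/dt = -u.
zero_params = ([0.0, 0.0], [0.0, 0.0], [0.0, 0.0], 0.0)
trajectory = rk4_solve(lambda u: ude_rhs(u, 1.0, zero_params), 1.0, 1.0, 100)
print(trajectory[-1], math.exp(-1.0))
```

In a real workflow the network parameters would be fitted so that the simulated trajectory matches data, which is where the mechanistic term lets the model learn from comparatively few observations.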

Dr. Rackauckas' work has led to foundational software for scientific machine learning (scientific ML), including DiffEqFlux.jl, which allows researchers to train a wide range of differential equation models mixed with neural networks. This covers neural ordinary differential equations (neural ODEs), neural stochastic differential equations (neural SDEs), neural delay differential equations (neural DDEs), neural differential-algebraic equations (neural DAEs), neural partial differential equations (neural PDEs and NeuralPDE.jl), and hybrid equations like neural jump diffusions (neural jump SDEs). For most of these equations, it is the first software to support neural network integration, and it does so while providing GPU acceleration and all of the features of DifferentialEquations.jl, meaning stiff equations can be efficiently integrated with state-of-the-art methods (automated sparsity detection and coloring, Jacobian-Free Newton-Krylov, etc.). These Julia-based implementations show over an order of magnitude performance improvement over comparable PyTorch libraries, over two orders of magnitude faster neural ODE fitting, and over three orders of magnitude performance advantage for SDE solving.

Chris' research is showcasing how machine learning integrated into differential equations can be used in many ways, including:

- Accelerating the solution of large sparse ODE and DAE systems and their inverse problems

- Automated data-driven discovery of dynamical equations, physical laws, and biological interactions

- Performing nonlinear optimal control via neural networks

In particular, Dr. Rackauckas' research has shown that classic deep learning techniques such as recurrent neural networks (RNNs) and long short-term memory networks (LSTMs) are incapable of learning the highly stiff dynamical systems that are common in science and engineering. To solve these problems, Chris' research mixes numerical analysis into machine learning to develop new architectures that are robust to stiffness, such as continuous-time echo state networks and stiff neural ordinary differential equations. The robustness of these new methods has made it practical to automate them for real-world usage, which has led to JuliaSim and the ModelingToolkit modeling language.
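To make "stiffness" concrete, the sketch below applies two textbook time-stepping schemes to the standard linear test equation du/dt = -λu with a large decay rate. It is a generic numerical analysis illustration in plain Python, not the continuous-time echo state network or any method from the research described above. The function names, the decay rate of 1000, and the step size of 0.01 are assumptions chosen for illustration.

```python
def explicit_euler(decay_rate, u0, dt, n_steps):
    # Forward Euler: u_next = u + dt * f(u), with f(u) = -decay_rate * u.
    u = u0
    for _ in range(n_steps):
        u = u + dt * (-decay_rate * u)
    return u

def implicit_euler(decay_rate, u0, dt, n_steps):
    # Backward Euler: u_next = u + dt * f(u_next). For this linear problem the
    # implicit equation can be solved in closed form.
    u = u0
    for _ in range(n_steps):
        u = u / (1.0 + dt * decay_rate)
    return u

decay_rate = 1000.0   # fast time scale (assumed value)
dt = 0.01             # step size far larger than 1 / decay_rate
explicit_result = explicit_euler(decay_rate, 1.0, dt, 50)
implicit_result = implicit_euler(decay_rate, 1.0, dt, 50)

# The true solution decays to zero. The explicit scheme multiplies the state
# by (1 - dt * decay_rate) = -9 each step and blows up, while the implicit
# scheme multiplies by 1 / 11 and decays.
print(explicit_result, implicit_result)
```

The explicit scheme is only stable here if the step size is below 2/λ, which is the kind of restriction that makes naive approaches impractical on stiff systems and motivates stiffness-aware methods.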

Scientific Machine Learning as Next Generation Healthcare

Recent biological techniques, such as next generation sequencing, have enabled the gathering of gigantic amounts of data. For example, microfluidics mixed with RNA-sequencing allows for the generation of entire genomic and transcriptomic profiles from each individual cell in a population, giving a way to quantify not only the chemical concentrations within a cell but also how they differ across cells. Electronic devices such as cellphones and wearables are gathering continuous data on individuals, who become patients. Yet even with all of this data available, a physician still checks only a few variables, such as height, weight, and gender, in order to prescribe a dose.

Chris' research is investigating how we can do better. By mixing nonlinear mixed effects models with machine learning and uncertainty quantification techniques, Chris is developing new methodologies that use the big data within Electronic Health Records (EHR) to enable personalized precision medicine. This is showcased in the culminating software, Pumas, which integrates an entire pharmacometric modeling stack into Julia with new techniques to be a practical Scientific AI for next generation data-driven healthcare.

High Performance Solving of Differential Equations

Differential equations are the foundation of the model simulators in Scientific AI. Chris is the lead developer of the DifferentialEquations.jl suite of solvers, which includes:

- Discrete equations (function maps, discrete stochastic (Gillespie/Markov) simulations)

- Ordinary differential equations (ODEs)

- Split and Partitioned ODEs (Symplectic integrators, IMEX Methods)

- Stochastic ordinary differential equations (SODEs or SDEs)

- Random differential equations (RODEs or RDEs)

- Differential algebraic equations (DAEs)

- Delay differential equations (DDEs)

- Mixed discrete and continuous equations (Hybrid Equations, Jump Diffusions)

- (Stochastic) partial differential equations ((S)PDEs) (with both finite difference and finite element methods)

The well-optimized DifferentialEquations.jl solvers benchmark as some of the fastest implementations, using classic algorithms and ones from recent research which routinely outperform the "standard" C/Fortran methods, and include algorithms optimized for high-precision and HPC applications. At the same time, the suite wraps the classic C/Fortran methods, making it easy to switch over to them whenever necessary. It integrates with the Julia package ecosystem, for example through Juno's progress meter, automatic plotting, and built-in interpolations, and it wraps other differential equation solvers so that many different methods for solving the equations can be accessed by simply switching a keyword argument. It uses Julia's generality to solve problems specified with arbitrary number types (types with units like Unitful, and arbitrary precision numbers like BigFloats and ArbFloats), arbitrary sized arrays (ODEs on matrices), and more. This gives a powerful mixture of speed and productivity features to help you solve and analyze your differential equations faster.
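The value of number-type generality can be sketched with a loose analogy in plain Python, where a solver written only in terms of `+` and `*` runs unchanged on floats and on exact rationals. This is not how DifferentialEquations.jl is implemented, and the names `euler_solve` and `decay` are hypothetical; the fixed-step Euler scheme is chosen only to keep the example short.

```python
from fractions import Fraction

def euler_solve(rhs, u0, dt, n_steps):
    # Fixed-step forward Euler written without assuming a concrete number type:
    # it only needs the state and step size to support + and *.
    u = u0
    for _ in range(n_steps):
        u = u + dt * rhs(u)
    return u

def decay(u):
    # Right-hand side of du/dt = -u.
    return -u

# Same solver code, two number types.
float_result = euler_solve(decay, 1.0, 0.1, 10)
exact_result = euler_solve(decay, Fraction(1), Fraction(1, 10), 10)

print(float_result)   # floating-point approximation of the Euler iterate
print(exact_result)   # the same Euler iterate as an exact rational number
```

The rational run reproduces the Euler iterate with no rounding error, which is the same kind of benefit that unit-carrying or arbitrary-precision types provide when the solver itself is generic.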

Chris' research continues to advance DifferentialEquations.jl, including research into multi-GPU parallelization techniques for ODE and DAE solvers, adaptive stochastic differential equation integrators, uncertainty quantification of differential equations, and more.